Google's AI Overviews Face EU Scrutiny: The Ethics of Information Extraction in the Age of AI

When AI Becomes the Gatekeeper

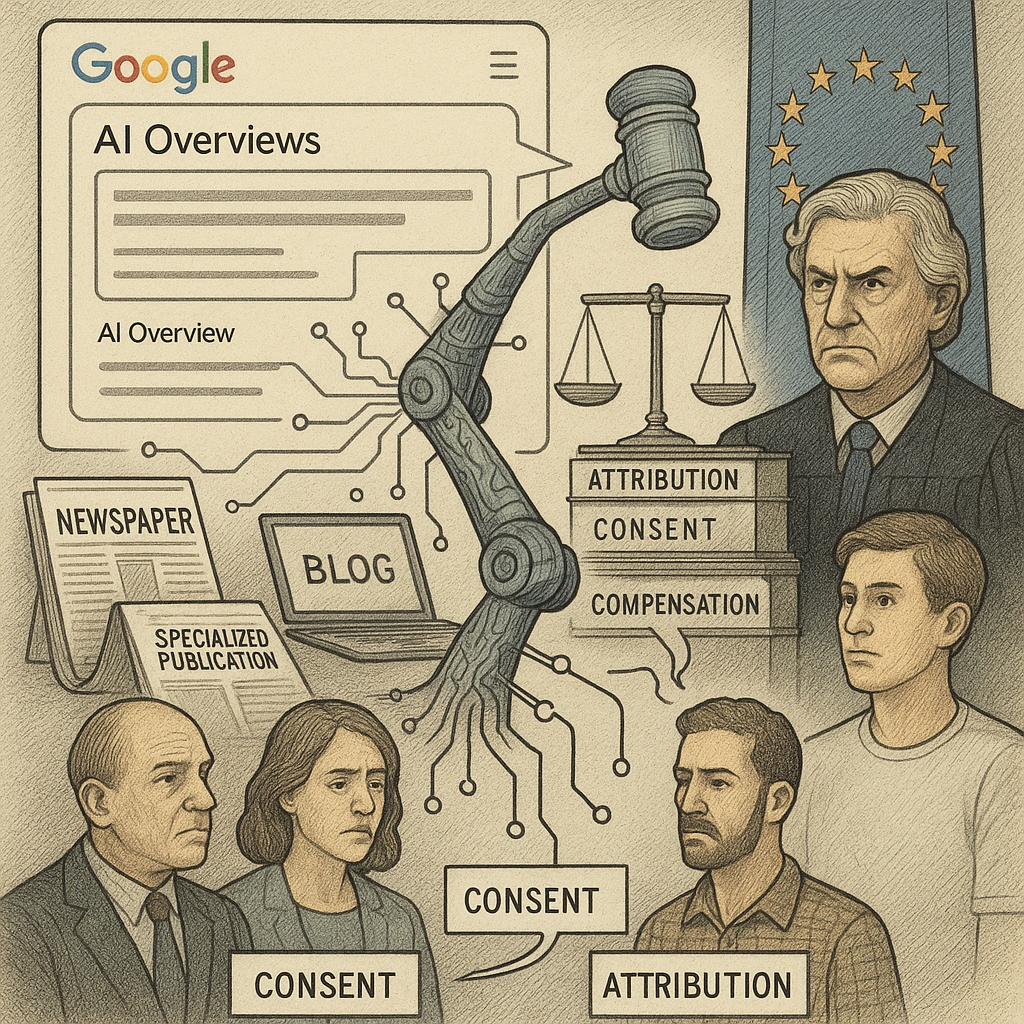

This week brought news that should concern anyone who cares about the intersection of artificial intelligence and fair information practices. The Independent Publishers Alliance has filed an antitrust complaint with the European Commission against Google’s AI Overviews feature, alleging that the tech giant is “misusing web content” and causing “significant harm to publishers” through traffic, readership, and revenue losses.

As someone who has spent years analyzing the ethical implications of AI systems, I find myself both unsurprised by this development and deeply concerned about what it represents for the future of content creation and information distribution in our increasingly AI-mediated world.

The Forced Partnership Problem

At the heart of this complaint lies a fundamental ethical issue that extends far beyond antitrust concerns: the question of meaningful consent in the age of AI. According to reports, publishers essentially face a Hobson’s choice—either allow their content to be used in Google’s AI summaries or risk disappearing from search results entirely.

This isn’t really a choice at all. It’s what I call a “forced partnership” scenario, where one party’s market dominance creates conditions that make genuine consent impossible. When Google controls approximately 90% of global search traffic, telling publishers they can “opt out” by becoming invisible to searchers is like offering someone the choice between surrendering their wallet and jumping off a cliff.

The ethical implications here are profound. We’re witnessing the emergence of AI systems that extract value from human-created content while providing creators with diminishing returns. This isn’t just an economic issue—it’s a question about how we want to structure the relationship between human creativity and artificial intelligence.

Beyond Traffic: The Deeper Questions

While much of the discussion around Google’s AI Overviews focuses on traffic decline and revenue loss—both legitimate concerns—I believe we need to examine the broader implications for information integrity and creator incentives.

When AI systems provide users with summarized answers at the top of search results, we’re changing the fundamental dynamics of how people interact with information. Users increasingly read AI-generated summaries instead of visiting original sources, and that shift sets off several cascading effects:

The Context Collapse Problem: AI summaries, no matter how sophisticated, inevitably strip away nuance, context, and the full argumentation that makes content valuable. When users consume only the AI-distilled version, they miss the deeper insights, methodological details, and contextual qualifiers that responsible content creators include.

The Attribution Erosion: Even when AI Overviews include links to sources, the prominence of the AI summary often means users don’t engage with the original content. This erodes the connection between readers and creators, undermining the relationship that has traditionally incentivized quality content creation.

The Expertise Devaluation: Perhaps most troubling, this system potentially devalues specialized expertise. Why invest years developing deep knowledge in a field if AI systems can extract and redistribute the fruits of that expertise without providing proportional compensation or recognition?

The Innovation vs. Fairness Tension

Google’s response to these concerns—that AI experiences “create new opportunities for content and businesses to be discovered”—reflects a common pattern in how tech companies frame ethical challenges as innovation opportunities. This framing, while not inherently wrong, often obscures important power dynamics and distributional effects.

The reality is more complex than either purely pro-innovation or purely pro-publisher perspectives suggest. AI-powered search capabilities do offer genuine benefits: they can make information more accessible, help users find answers more quickly, and potentially surface content that might otherwise remain buried in search results.

However, these benefits must be weighed against the costs and risks, particularly when they’re imposed on content creators without meaningful consent or fair compensation mechanisms.

Learning from Creative Industries

The current situation with AI Overviews bears striking similarities to disruptions we’ve seen in creative industries. Music streaming, digital photography, and online journalism have all grappled with how technological advancement can simultaneously expand access while undermining traditional creator economics.

In each case, the most sustainable solutions have emerged when technology companies worked collaboratively with content creators to develop new value-sharing models rather than simply implementing systems that extracted value from existing content.

The music industry’s evolution from the chaos of early file-sharing to today’s streaming royalty systems—while imperfect—offers a potential roadmap. It took years of negotiation, regulation, and experimentation, but eventually resulted in systems that provide some compensation to creators while maintaining the accessibility benefits of digital distribution.

The Regulatory Response

The EU’s investigation of this complaint will likely focus on traditional antitrust concerns: market dominance, competitive practices, and consumer welfare. But I hope regulators will also consider the broader ethical dimensions of AI systems that extract value from human-created content.

Europe has already demonstrated leadership in technology regulation through the AI Act and the GDPR. This case presents an opportunity to extend that leadership by establishing principles for how AI systems should interact with content creators, principles that could influence global standards.

Key questions regulators should consider include:

- What constitutes meaningful consent when market dominance makes rejection practically impossible?

- How should AI systems attribute and compensate creators for content use?

- What safeguards are needed to ensure AI summaries don’t misrepresent or decontextualize original content?

- How can we balance innovation benefits with creator rights and content quality incentives?

Toward Ethical AI Information Systems

Rather than viewing this as a zero-sum battle between innovation and creator rights, we should see it as an opportunity to develop more ethical approaches to AI-powered information systems. Several principles could guide this development:

Meaningful Consent: Content creators should have genuine choices about how their work is used in AI systems, including granular controls over different types of use and clear alternatives that don’t impose significant competitive disadvantages.

Fair Value Sharing: When AI systems generate value by processing human-created content, creators should receive proportional compensation. This might involve revenue-sharing models, licensing fees, or other mechanisms that ensure creators benefit from the AI systems that depend on their work.

Transparency and Attribution: AI systems should clearly indicate when they’re presenting summarized or processed information, provide prominent attribution to original sources, and offer easy paths for users to access full original content.

Quality Preservation: Mechanisms should exist to ensure AI summaries don’t misrepresent, oversimplify, or decontextualize original content in ways that mislead users or harm creators’ reputations.

The Broader Implications for Work and Creativity

This case extends beyond publishing to fundamental questions about how AI systems interact with human creative and intellectual labor. As AI becomes more capable of extracting, synthesizing, and redistributing human-created content, we need frameworks that ensure these capabilities benefit both users and creators.

The patterns we establish now—whether through regulation, market pressure, or industry self-governance—will influence how AI systems interact with human creativity across domains from journalism and research to art and education.

If we allow AI systems to extract value from human creativity without providing proportional benefits to creators, we risk undermining the incentives that drive quality content creation. This could lead to a future where AI systems become increasingly dominant in information distribution while the human expertise they depend on gradually erodes.

A Path Forward

The solution isn’t to halt AI development or to freeze today’s information systems in place. Instead, we need approaches that harness AI’s potential while ensuring fair treatment of content creators and maintaining incentives for quality human creativity.

This might involve:

- Collaborative Platform Development: Tech companies working with content creators to develop AI systems that enhance rather than replace traditional creator-audience relationships

- Innovative Compensation Models: Exploring revenue-sharing, licensing, or subscription models that ensure creators benefit from AI systems that use their content

- Regulatory Frameworks: Governments establishing principles for AI-content interaction that balance innovation with creator rights

- Industry Standards: Professional organizations developing best practices for ethical AI use in information systems

The Stakes Are Higher Than Traffic

While the immediate focus of the EU complaint involves website traffic and advertising revenue, the deeper questions involve the future of human creativity and expertise in an AI-driven world. How we resolve these issues will influence whether AI becomes a tool that enhances human creativity and knowledge-sharing or one that gradually undermines the incentives that drive quality content creation.

The choices we make now about how AI systems interact with human-created content will reverberate across industries and influence the development of AI technologies for years to come. We have an opportunity to establish ethical precedents that ensure AI development serves human flourishing rather than merely optimizing for efficiency or corporate profits.

As this case moves through the regulatory process, I encourage everyone—from policymakers to technologists to content creators—to think beyond the immediate competitive dynamics and consider the broader question: What kind of relationship do we want between artificial intelligence and human creativity?

The answer to that question will shape not just the future of search and publishing, but the future of work and creativity in an increasingly AI-mediated world.

What are your thoughts on the balance between AI innovation and creator rights? How do you think we should structure the relationship between AI systems and human-created content? Share your perspectives in the comments below.

AI-Generated Content Notice

This article was created using artificial intelligence technology. While we strive for accuracy and provide valuable insights, readers should independently verify information and use their own judgment when making business decisions. The content may not reflect real-time market conditions or personal circumstances.
