The AI Validation Gap in Radiology: When Innovation Outpaces Clinical Integration

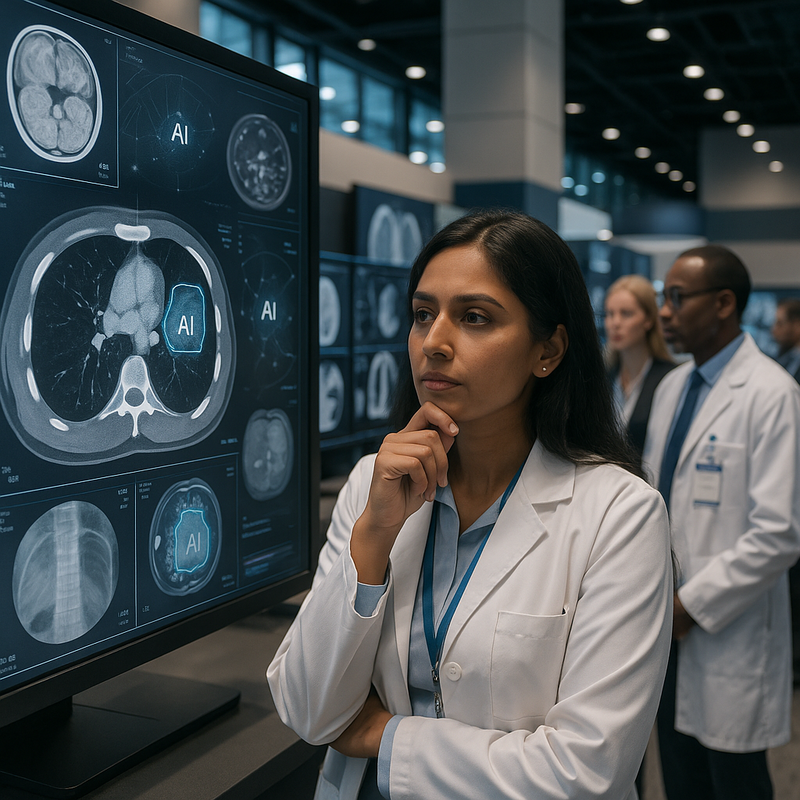

Walking through the AI showcase at this year’s RSNA conference in Chicago, I was struck by the sheer scale of the commercial push behind artificial intelligence in radiology. Over 100 companies occupied an area larger than two football fields, each promising to revolutionize medical imaging with their algorithms. Massive displays demonstrated AI detecting fractures in X-rays, identifying breast cancer in mammograms, measuring brain atrophy in MRIs, and flagging cardiac blockages in CT scans. The spectacle was impressive—and concerning.

As someone who has spent fifteen years working at the intersection of AI and healthcare, I’ve learned to look beyond the marketing materials. What I observed at RSNA 2025 crystallizes a challenge I’ve been wrestling with for years: the breathtaking pace of AI innovation in medical imaging has created a validation gap that could undermine the very promise these technologies hold.

The Showcase vs. The Science

The atmosphere at McCormick Place last week felt more like a consumer electronics show than a medical conference. Major hardware manufacturers—GE, Philips, and Siemens, the “GPS” of radiology—integrated AI into seemingly every imaging modality. Sales representatives offered cappuccinos and smoothies while demonstrating AI-assisted ultrasound on hired patients. A three-dimensional heart model, larger than a human head, pulsed with neon light to showcase one company’s cardiac imaging AI. Attendees posed for selfies in front of multi-story booth displays.

This marketing spectacle obscures a more sobering reality: we’re deploying AI systems in clinical settings faster than we can rigorously validate their performance, understand their limitations, or properly integrate them into existing workflows. The gap between innovation velocity and clinical evidence isn’t just an academic concern—it has direct implications for patient care.

According to recent reporting from STAT News, the proliferation of AI tools at RSNA 2025 represents the culmination of years of rapid commercialization in medical imaging AI. Yet many radiologists I spoke with at the conference expressed the same underlying anxiety: how do we know which of these hundreds of tools actually improve patient outcomes?

The Clinical Validation Challenge

The fundamental problem is one of timescales. AI development cycles have compressed dramatically. Companies can now train, refine, and deploy diagnostic algorithms in months. Meanwhile, rigorous clinical validation—the kind that builds confidence in real-world performance—requires years of careful study across diverse patient populations and clinical settings.

The FDA has authorized over 500 AI/ML-enabled medical devices, with radiology representing the largest category. This rapid regulatory pace reflects an attempt to keep up with innovation rather than a comprehensive validation framework. Most of these devices reach the market through 510(k) clearance, which establishes that a tool is substantially equivalent to a legally marketed predicate device and performs as intended under the conditions tested. Clearance doesn't necessarily show that the tool improves diagnostic accuracy, reduces radiologist workload, or enhances patient outcomes in the messy reality of clinical practice.

My research team at Stanford Medicine has documented this validation gap repeatedly. Algorithms that achieve 95% accuracy on carefully curated development datasets often perform significantly worse when deployed in hospitals serving different patient populations. An AI tool trained primarily on imaging data from one demographic group may underperform, sometimes dangerously, when applied to patients with different genetic backgrounds, body compositions, or disease presentations.
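
One practical consequence of this gap is that a single pooled accuracy figure can hide a subgroup where a tool performs poorly. The Python sketch below uses entirely synthetic counts and a hypothetical (subgroup, truth, flagged) record format to show how stratifying sensitivity and specificity by subgroup exposes what the aggregate conceals. It is an illustration under those assumptions, not a validation protocol.

```python
from collections import defaultdict


def sensitivity_specificity(pairs):
    """Compute (sensitivity, specificity) from (truth, flagged) boolean pairs."""
    tp = sum(1 for truth, flagged in pairs if truth and flagged)
    fn = sum(1 for truth, flagged in pairs if truth and not flagged)
    tn = sum(1 for truth, flagged in pairs if not truth and not flagged)
    fp = sum(1 for truth, flagged in pairs if not truth and flagged)
    sens = tp / (tp + fn) if tp + fn else float("nan")
    spec = tn / (tn + fp) if tn + fp else float("nan")
    return sens, spec


def stratified_report(cases):
    """Return pooled metrics plus one entry per subgroup.

    `cases` is a list of (subgroup, truth, flagged) tuples; this schema is
    hypothetical and stands in for whatever a real validation study records.
    """
    by_group = defaultdict(list)
    for subgroup, truth, flagged in cases:
        by_group[subgroup].append((truth, flagged))
    report = {"overall": sensitivity_specificity([(t, f) for _, t, f in cases])}
    for subgroup in sorted(by_group):
        report[subgroup] = sensitivity_specificity(by_group[subgroup])
    return report


def synthetic_cases(subgroup, tp, fn, tn, fp):
    """Build toy cases with the given confusion-matrix counts (illustrative only)."""
    return ([(subgroup, True, True)] * tp + [(subgroup, True, False)] * fn
            + [(subgroup, False, False)] * tn + [(subgroup, False, True)] * fp)


# Hypothetical counts: a well-represented subgroup and a smaller one.
cases = (synthetic_cases("subgroup_A", tp=95, fn=5, tn=95, fp=5)
         + synthetic_cases("subgroup_B", tp=12, fn=8, tn=18, fp=2))

report = stratified_report(cases)
for name, (sens, spec) in report.items():
    print(f"{name:>10}: sensitivity={sens:.2f} specificity={spec:.2f}")
```

With these made-up counts, pooled sensitivity is about 0.89 while subgroup_B sits at 0.60. A real study would also report confidence intervals, which matter a great deal for small subgroups like this one.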

This isn’t a theoretical concern. I’ve witnessed AI systems flag false positives at rates that overwhelm radiologists rather than assist them. I’ve seen tools fail to detect pathology in patients from underrepresented groups because the training data lacked diversity. These failures don’t always show up in initial validation studies, which is precisely why the current pace of deployment troubles me.
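
Alert overload has a simple arithmetic component: when a condition is rare, even a fairly specific tool generates far more false flags than true ones. The sketch below computes expected flags and positive predictive value (PPV) for a hypothetical tool. The prevalence, sensitivity, and specificity values are illustrative assumptions, not measured figures for any product.

```python
def expected_flags(n_studies, prevalence, sensitivity, specificity):
    """Expected true and false positive flags, plus PPV, for a screening-style tool.

    All inputs are hypothetical; prevalence, sensitivity, and specificity are
    fractions in [0, 1].
    """
    diseased = n_studies * prevalence
    healthy = n_studies - diseased
    true_pos = diseased * sensitivity          # pathology correctly flagged
    false_pos = healthy * (1 - specificity)    # normal studies flagged anyway
    ppv = true_pos / (true_pos + false_pos)    # share of flags that are real
    return true_pos, false_pos, ppv


# Assumed: 1,000 studies, 1% prevalence, 95% sensitivity, 90% specificity.
tp, fp, ppv = expected_flags(1000, 0.01, 0.95, 0.90)
print(f"true flags={tp:.1f}, false flags={fp:.1f}, PPV={ppv:.1%}")
```

With these assumed inputs, roughly 99 of about 108 flags per 1,000 studies are false, a PPV under 9%. That is the kind of ratio that turns an assistant into a distraction.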

The Integration Imperative

Even when AI tools perform well in isolation, integrating them into clinical workflows presents enormous challenges. The RSNA showcase featured dozens of point solutions—specialized algorithms for detecting specific pathologies. But radiologists don’t work with single-purpose tools. They interpret complex cases requiring assessment across multiple body systems, consideration of patient history, and synthesis of findings from various imaging modalities.

Imagine a radiologist reviewing a chest CT scan. She might need to evaluate the lungs for nodules, check the heart for calcification, assess the bones for fractures or metastases, examine the portion of the liver captured in the scan, and consider how findings relate to the patient’s clinical presentation. If she must consult five different AI tools, each with its own interface, approval workflow, and documentation requirements, the technology becomes a burden rather than an aid.

The integration challenge extends beyond user interfaces. Healthcare systems must grapple with questions of liability when AI misses a diagnosis or generates a false alarm. Who’s responsible when an algorithm fails—the radiologist who relied on it, the hospital that deployed it, or the vendor that developed it? These questions lack clear answers, creating hesitancy around adoption even among clinicians who recognize AI’s potential.

Training represents another integration hurdle. Radiologists need to understand not just how to use AI tools, but how to interpret their outputs critically, recognize their limitations, and maintain diagnostic skills that might atrophy with over-reliance on automation. This requires a fundamental shift in radiology education and continuing professional development—a shift that medical institutions are only beginning to address.

Real-World Consequences

The validation gap has tangible consequences for both patients and clinicians. For patients, premature deployment of inadequately validated AI risks missed diagnoses, unnecessary procedures from false positives, and erosion of trust in medical imaging. The promise of AI in healthcare—democratizing access to expert-level diagnostics and catching diseases earlier—depends entirely on these systems performing reliably across all patient populations.

For radiologists, the current environment creates impossible pressures. They’re told AI represents the future of their profession, that they must adopt these tools or risk obsolescence. Yet they’re also held to the highest standards of diagnostic accuracy, legally and ethically responsible for every interpretation. How can they confidently integrate AI systems whose real-world performance characteristics remain incompletely understood?

I’ve spoken with radiologists who describe a sense of whiplash—simultaneously expected to embrace AI innovation while maintaining vigilance against its failures. Some feel overwhelmed by the sheer number of tools becoming available, each requiring evaluation and potential workflow changes. Others worry that relying too heavily on AI will erode the pattern recognition skills and clinical judgment that define expert radiology practice.

A Path Forward

The solution isn’t to slow innovation or reject AI’s potential. Rather, we need alignment between development cycles and validation timelines. This requires several coordinated efforts:

First, we need standardized frameworks for prospective clinical validation that go beyond initial FDA clearance. These frameworks should require demonstration of improved patient outcomes, not just algorithmic accuracy. They should mandate testing across diverse patient populations before broad deployment.

Second, we must develop better integration strategies that combine multiple AI capabilities into coherent clinical decision support systems rather than expecting radiologists to juggle dozens of point solutions. The goal should be augmenting clinical judgment, not fragmenting it.

Third, medical education must evolve to prepare radiologists for AI-augmented practice. This means teaching critical evaluation of AI outputs, understanding of algorithmic limitations, and maintenance of core diagnostic skills. It also requires ongoing education as AI capabilities evolve.

Fourth, we need clearer liability frameworks that encourage responsible AI adoption without exposing clinicians to untenable risk. This likely requires new models of shared responsibility between developers, healthcare institutions, and clinicians.

Finally, we must resist the commercial pressure to deploy AI for deployment’s sake. The measure of success shouldn’t be the number of AI tools implemented, but demonstrable improvements in diagnostic accuracy, efficiency, and patient outcomes.

The Stakes Are High

The disconnect I witnessed between the RSNA showcase’s commercial exuberance and the clinical reality facing radiologists reflects a broader challenge in healthcare AI. We’ve entered an era where technological capability has outstripped our institutional capacity to validate, integrate, and optimize these powerful tools.

This moment demands careful navigation. The potential of AI to improve medical imaging is real and profound. Algorithms can detect patterns invisible to human observers, analyze images with tireless consistency, and extend expert-level diagnostics to underserved populations. But realizing this potential requires more than impressive demonstrations and FDA clearances.

It requires the hard, unglamorous work of rigorous validation across diverse patient populations. It demands thoughtful integration that respects clinical workflows and cognitive loads. It necessitates honest assessment of where AI helps and where it falls short. Most importantly, it demands that we prioritize patient outcomes over innovation velocity.

The validation gap in radiology AI isn’t insurmountable, but closing it requires acknowledging its existence. As I left RSNA 2025, I couldn’t help thinking about the enormous gap between the neon-lit spectacle I’d witnessed and the careful, evidence-based medicine we should be practicing. The question isn’t whether AI belongs in radiology—it clearly does. The question is whether we’ll do the work necessary to ensure it belongs there safely, effectively, and equitably.

That work starts with recognizing that not all innovation is progress, and that the pace of change must be tempered by the time required to validate, integrate, and understand the tools we’re deploying in service of patient care.

Sources:

Palmer, K. (2025, December 5). In radiology, AI is advancing faster than the field can keep up. STAT News. https://www.statnews.com/2025/12/05/radiology-artificial-intelligence-advancing-faster-rsna/ (Accessed December 7, 2025)

U.S. Food and Drug Administration. (2024). Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices. https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices (Accessed December 7, 2025)

Radiological Society of North America. (2025). RSNA 2025 Annual Meeting. https://www.rsna.org/ (Accessed December 7, 2025)

AI-Generated Content Notice

This article was created using artificial intelligence technology. While we strive for accuracy and provide valuable insights, readers should independently verify information and use their own judgment when making business decisions. The content may not reflect real-time market conditions or personal circumstances.